AWS SageMaker Batch Transform Design Pattern

Marco Susilo, posted 24 December 2020

This article was originally published by Marco Susilo on the TowardsDataScience publication.

A scalable design pattern for machine learning batch transforms that uses AWS and SageMaker to embed advanced analytics into business applications.

A common scenario I encounter goes like this:

An organisation’s team of data scientists have invested significant time and resources in fine-tuning and training a machine learning model to solve a business problem. Finally, they have settled on the one (or an ensemble or stacked ensemble).

The business are thrilled to hear the great news. Word spreads that a revolutionary technology is going to radically transform the business by improving profit by x% or efficiency by y%. Soon the news reaches the senior executives, and they want it available now, now, now.

The team of data scientists are now in a predicament. They have done their part (creating a model), but the business cannot make use of a model artifact on its own; it needs the model integrated into business applications. How can this be done?

The above scenario is often the result of a skills gap: the organisation has not planned how to deploy and integrate machine learning models into the business applications in its tech environment. Luckily, cloud vendors are picking up on this gap and providing services and design patterns to fill it.

Below is a design pattern I have used with great success in AWS environments, for scenarios where online prediction is not required and an application can get away with batch prediction.

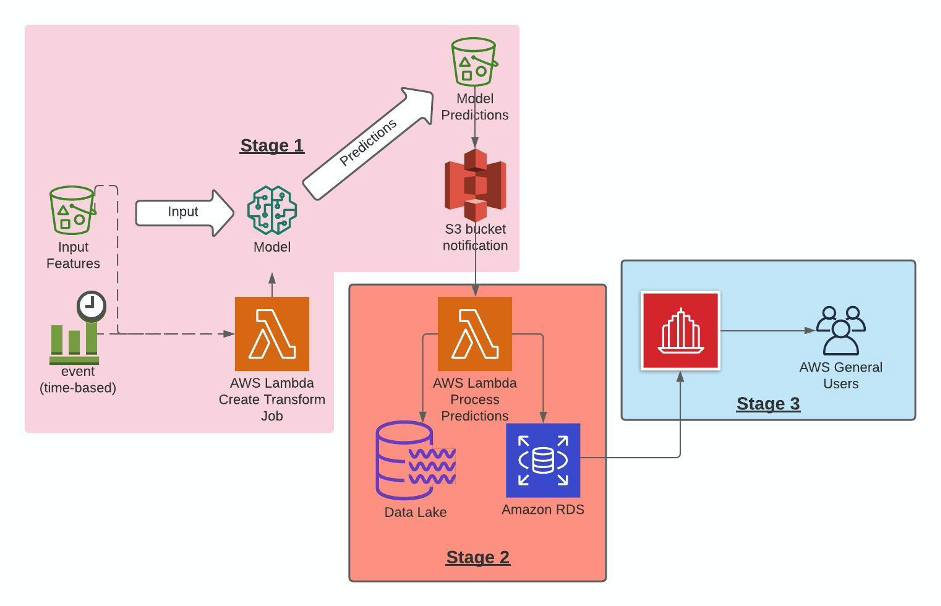

Image supplied by Author.

There are three main stages, which I go into in more detail below.

Stage 1

The first Lambda function simply creates a transform job in SageMaker using the final model that the team of data scientists have agreed to use for the business application. As part of the create_transform_job API call, you can provide various parameters to scale up or down as required. The ones I tweak are InstanceType, InstanceCount, MaxConcurrentTransforms and MaxPayloadInMB. You can read more about what these and the other parameters do in the SageMaker CreateTransformJob API reference.
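A minimal sketch of what such a Stage 1 Lambda could look like, using boto3's `create_transform_job`. It assumes CSV input with one record per line, an `ml.m5.xlarge` default instance type, and three hypothetical environment variables (`MODEL_NAME`, `INPUT_S3_URI`, `OUTPUT_S3_URI`); the request is built in a separate function so the parameters can be inspected without calling AWS. Note that `InstanceType` and `InstanceCount` sit inside `TransformResources`, while `MaxConcurrentTransforms` and `MaxPayloadInMB` are top-level arguments.

```python
import os
import time


def build_transform_request(model_name, input_uri, output_uri,
                            instance_type="ml.m5.xlarge", instance_count=1,
                            max_concurrent=4, max_payload_mb=6):
    """Build keyword arguments for SageMaker's create_transform_job (illustrative values)."""
    # Job names must be unique and at most 63 characters: truncate the model
    # name so the 15-character timestamp suffix always survives.
    job_name = f"{model_name[:47]}-{time.strftime('%Y%m%d-%H%M%S')}"
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "MaxConcurrentTransforms": max_concurrent,  # parallel requests per instance
        "MaxPayloadInMB": max_payload_mb,            # max size of each mini-batch
        "BatchStrategy": "MultiRecord",
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_uri}},
            "ContentType": "text/csv",  # assumption: CSV input
            "SplitType": "Line",        # split input files into one record per line
        },
        "TransformOutput": {"S3OutputPath": output_uri, "AssembleWith": "Line"},
        "TransformResources": {"InstanceType": instance_type, "InstanceCount": instance_count},
    }


def lambda_handler(event, context):
    # Imported here so build_transform_request can be exercised without boto3.
    import boto3

    request = build_transform_request(
        model_name=os.environ["MODEL_NAME"],         # hypothetical env var
        input_uri=os.environ["INPUT_S3_URI"],        # hypothetical env var
        output_uri=os.environ["OUTPUT_S3_URI"],      # hypothetical env var
        instance_count=int(os.environ.get("INSTANCE_COUNT", "1")),
    )
    boto3.client("sagemaker").create_transform_job(**request)
    return {"TransformJobName": request["TransformJobName"]}
```

Scaling up is then a matter of changing `instance_type`, `instance_count` or `max_concurrent`; keep `MaxConcurrentTransforms * MaxPayloadInMB` within SageMaker's 100 MB limit.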

When the API call succeeds, SageMaker processes the input data, makes predictions with the chosen model and writes them to the specified S3 bucket. New objects in that bucket in turn trigger the second Lambda function, which processes the prediction output.
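One way to wire up that trigger is an S3 event notification on the output bucket. SageMaker names each batch transform output object after its input object with `.out` appended, so a suffix filter keeps the Lambda from firing on anything else in the bucket. This is a sketch only: the function ARN and prefix are hypothetical, and many teams would define the same configuration in CloudFormation or Terraform instead.

```python
def build_notification_config(function_arn, prefix="", suffix=".out"):
    """S3 notification config that invokes a Lambda for each new transform output file."""
    rules = [{"Name": "suffix", "Value": suffix}]
    if prefix:
        # Optionally restrict the trigger to one folder of the bucket.
        rules.insert(0, {"Name": "prefix", "Value": prefix})
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {"Key": {"FilterRules": rules}},
            }
        ]
    }


def attach_trigger(bucket, function_arn, prefix=""):
    import boto3

    # Caution: this call replaces the bucket's entire notification configuration.
    boto3.client("s3").put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_config(function_arn, prefix),
    )
```

S3 also needs permission to invoke the function (granted with the Lambda `add_permission` API) before this configuration can be saved.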

Stage 2

When predictions start landing in the “Model Predictions” bucket in the diagram above, they trigger a second Lambda function, which I usually use to process the predictions. In this function you can do some post-processing and write the model predictions to the organisation’s data lake and/or a database or data warehouse.
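A minimal sketch of such a Stage 2 Lambda, assuming the model writes one numeric score per line and that "post-processing" means attaching a label at a 0.5 threshold. The threshold, the `DATA_LAKE_BUCKET` environment variable and the `predictions/` key layout are all hypothetical; substitute whatever transformation and destination your application actually needs.

```python
import csv
import io
import os
from urllib.parse import unquote_plus


def objects_from_event(event):
    """Yield (bucket, key) pairs from an S3 ObjectCreated notification."""
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded in S3 event notifications.
        yield s3["bucket"]["name"], unquote_plus(s3["object"]["key"])


def post_process(prediction_text, threshold=0.5):
    """Hypothetical post-processing: label each score and emit CSV with a header."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["score", "label"])
    for line in prediction_text.splitlines():
        if line.strip():  # skip blank lines
            score = float(line)
            writer.writerow([score, int(score >= threshold)])
    return out.getvalue()


def lambda_handler(event, context):
    import boto3

    s3 = boto3.client("s3")
    target_bucket = os.environ["DATA_LAKE_BUCKET"]  # hypothetical env var
    for bucket, key in objects_from_event(event):
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
        s3.put_object(
            Bucket=target_bucket,
            Key=f"predictions/{key}.csv",
            Body=post_process(body).encode("utf-8"),
        )
```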

One thing worth experimenting with is the reserved concurrency of this Lambda function. If it is too low, you will wait a long time for the model predictions to be processed; if it is too high, you may start getting errors from other AWS services, e.g. too many connections to RDS.
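As a starting point for that experiment, one rough heuristic (an assumption of this sketch, not an AWS rule) is to cap reserved concurrency so that the worst-case number of database connections stays below the database's limit, leaving some headroom for other clients. The cap can then be applied with the Lambda `put_function_concurrency` API; the function name passed in is hypothetical.

```python
def reserved_concurrency_cap(db_max_connections, connections_per_invocation=1, headroom=10):
    """Largest concurrency whose worst-case connection count fits under the DB limit."""
    cap = (db_max_connections - headroom) // connections_per_invocation
    if cap < 1:
        raise ValueError("database limit leaves no room for this function")
    return cap


def set_reserved_concurrency(function_name, value):
    import boto3

    # Reserves (and caps) concurrent executions for this function only.
    boto3.client("lambda").put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=value,
    )
```

For example, a database allowing 100 connections, with each invocation holding two and ten kept spare, gives a cap of 45. Tune downwards from there if other services start throttling.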

Stage 3

If you have reached this stage, you are near the end of the tunnel. This is where users of the business application consume the results of the new, revolutionary machine learning technology.

Final Thoughts

The above design pattern is a small part of the architecture of a business application with embedded advanced analytics. There will be upstream processes that extract, transform and enrich the data and move it through various systems. I have only focused on the services relevant to deploying a machine learning model.
